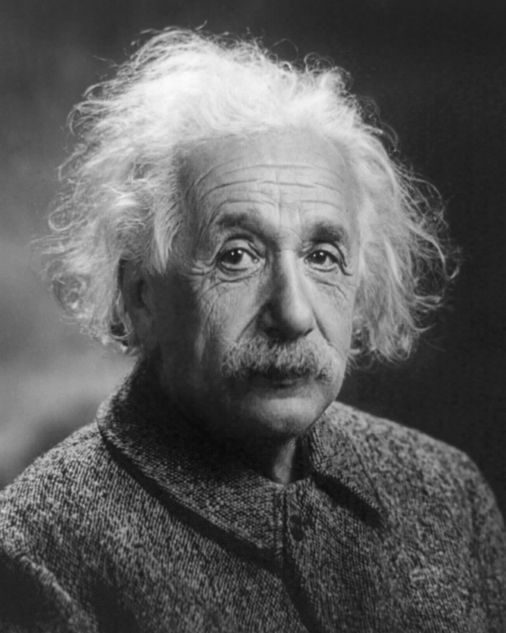

Late last year, an experiment carried out by scientists at the Delft University of Technology in the Netherlands appeared to demonstrate that one object can affect another from afar without any physical interaction between the two. The finding confirmed an idea so extraordinary that, nearly a century ago, Albert Einstein had rejected it with the dismissive phrase “spooky action at a distance.” In quantum theory this phenomenon is known as “entanglement,” and many physicists now regard it as the most profound and important characteristic of the physical world at the smallest scales, which quantum theory describes.

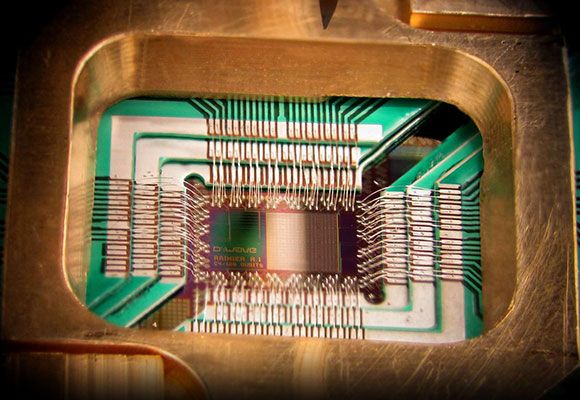

Quantum entanglement is a deeply counterintuitive idea, which seems to contradict human experience of the physical world at the most essential level. In the everyday (“classical”) physical realm, objects affect one another via some kind of contact. The tennis ball flies from the racquet when struck, and when it hits the window the glass will smash. Sure, “invisible forces” seem to act across space—magnetic and electrical attraction and repulsion, say. But in quantum theory these interactions arise from the passage of a particle—a photon of light—between the two interacting bodies. Meanwhile, Einstein showed that the Sun’s gravity corresponds to a distortion of space, to which distant objects such as Earth respond. It’s generally believed that in a quantum theory of gravity (which doesn’t yet exist), this picture will prove to be equivalent to the exchange of “gravity particles” or gravitons between the Sun and Earth.

But quantum entanglement bothered Einstein because it suggested that one particle could affect another even when there was no conceivable physical interaction between them. It didn’t matter if those particles were light years apart—measuring a property of one particle would, according to quantum theory, instantly affect the properties of the other. How could that be?

Entanglement isn’t just another of the strange features of the quantum world, alongside the idea that quantum particles are also waves and that they can be in two places at once, and so on. Rather, entanglement is arguably the central mystery of quantum theory. Pretty much everything that seems odd about the theory is encapsulated in entanglement, an idea that now stands at the very limits of our ability to understand the physical world.

And it is not just an abstruse theoretical diversion. Experimental physicists and engineers are taking an increasing interest in ever more complex examples of entanglement. In June, physicists at the University of Science and Technology of China in Hefei announced that they had created a state in which 10 photons had been entangled. The production and manipulation of such states is driving the emergence of quantum information technology, of which quantum computing is the most enticing facet. In effect, entanglement seems to be a kind of resource on which we can draw to do things to and with information that can’t be done with classical physics—to compute faster, say, and to encrypt data more securely. Entanglement embodies a different kind of logic to the one we’re used to: a quantum logic that undermines conventional ideas about time and space. To fully harness quantum entanglement as a tool for computation and for the manipulation of data would be a technological leap so great that it would make possible things that are at present inconceivable.

Though his name is often associated with his theories of relativity, Einstein also launched quantum theory. In 1905 he argued that the division of energy into discrete packets or “quanta,” which Max Planck, the German theoretical physicist, had proposed five years earlier as a mathematical convenience for describing the thermal vibrations of atoms, was a real phenomenon. Einstein applied the quantum idea to light itself, which he said is apportioned into particle-like entities called photons. As the “quantisation” of light, atoms and subatomic particles developed into a clearly formulated quantum theory in the 1920s, it became apparent that it dealt only in probabilities, not deterministic certainties. Unlike classical mechanics, quantum mechanics didn’t tell you what you would measure in an experiment, but only the likelihood of different possible outcomes.

According to the view developed by Niels Bohr, Werner Heisenberg and other physicists in Copenhagen in the 1920s, one had to predict the outcomes of experiments probabilistically not because one didn’t have enough detailed information to do any better, but because that was all there was to know. A measurement could produce a definite value—for the position of a particle, say—but before you make the measurement it is meaningless to ask “where” the particle is. In other words, measurements don’t show you where the particle is; they determine it.

This is the essence of what became known as the Copenhagen interpretation of quantum mechanics. The point of the theory, Bohr said, is not to tell us how the world is, but to predict what we can measure about it. And we can only do that statistically: a 50:50 chance of this or that, say. In this view, the common idea that quantum particles can be in two places at once misses the point. We shouldn’t say that the particle is both here and there before we measure it; rather, we must say that, before the observation, the notion of the particle having a precise position, or indeed a position at all, is meaningless.

All this was a jarring break from the past, when objects were assumed to have particular properties and locations irrespective of whether we look at them. Einstein objected to the Copenhagen interpretation because it undermined the idea of an objective reality. It was from Einstein’s attempt to restore definite, observer-independent properties to quantum particles that the notion of quantum entanglement emerged. He was convinced that, underlying quantum theory, there was a deeper reality in which all objects could be described by variables with particular values, even if these are somehow “hidden” from direct measurement. For Einstein, quantum theory was in this sense incomplete, and its use of probability was a consequence of imperfect knowledge. In 1935 Einstein and two young colleagues, Nathan Rosen and Boris Podolsky, described a thought experiment that attempted to show why these hidden variables, which they said underpinned quantum theory, were needed.

According to quantum theory, it is possible to place two particles in a state in which they are described by a single mathematical function called a wavefunction. This quantum wavefunction specifies how the two values of some property of the particles are related, without specifying what those values are.

To see what that means, consider the quantum property called spin. This is a peculiar thing with no classical analogue, but all you need to know is that quantum spin makes the particle a little like a bar magnet, with north and south poles oriented in some direction. In the classical world a magnet can point in any direction, but in the quantum world a spin may be constrained to just two directions: it could point “up,” say, or “down.”

We can prepare two particles in a way that compels their spins to point in opposite directions: if one is up, the other must be down. We can’t specify which is which, but we know for sure that they are correlated. So if we measure the spin of one particle and find it is up, we know the other must be down. Now suppose, said Einstein, Podolsky and Rosen, that our apparatus producing these particles spits them out in opposite directions. Then after they have separated a good distance, we measure the spin of one of them and find it is up. We can now be sure that the other is down.

That sounds unremarkable. We could say the same of a pair of gloves sent separately through the post to me and you: if I get the right-hand glove, I know at once, without checking, that you have the left. But according to the Copenhagen interpretation, the spins of the particles, unlike the handedness of the gloves, were not determined until we observed one of them. How, then, can the measurement on one particle not only decide which spin it has but also which spin the other one has too—even if it is miles or maybe light years away?

Yet quantum mechanics, Einstein and colleagues pointed out, does insist that this is what happens: there seems to be instant (or as Einstein said, “spooky”) action at a distance. That can’t be right, they argued, because Einstein’s theory of special relativity had conclusively shown that no signal could travel faster than light. In consequence, something must be missing from quantum theory. For Einstein, the missing ingredients were the hidden variables that would somehow assign a definite value to each of the spins all along.

When Einstein, Podolsky and Rosen presented their thought experiment, Erwin Schrödinger, the Austrian physicist who invented quantum wavefunctions, recognised at once its central importance in quantum theory (he also coined the term “entanglement”). Quantum theory appeared to work perfectly, yet this thought experiment pointed to apparently impossible conclusions. No one knew how to resolve it, and for decades it was brushed under the rug.

All that changed in 1964 when an Irishman named John Bell, a particle physicist at Cern, the centre for high-energy physics in Geneva, reformulated Einstein’s thought experiment in a way that suggested how it might be conducted for real. Bell showed how repeated measurements of the correlations between entangled particles could reveal whether there were indeed, as Einstein suggested, hidden variables underlying quantum mechanics.
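For readers who want to see the arithmetic behind such a test, here is a minimal sketch. It uses the CHSH form of the inequality, a later variant due to Clauser, Horne, Shimony and Holt rather than Bell's original 1964 expression, and it assumes the textbook quantum prediction for a spin-anticorrelated pair, E(a, b) = -cos(a - b), where a and b are the detector angles. Neither the formula nor the angle choices come from this article, and the function names are purely illustrative.

```python
import itertools
import math


def chsh(e_ab, e_ab2, e_a2b, e_a2b2):
    """Combine four measured correlations into the CHSH quantity S."""
    return e_ab - e_ab2 + e_a2b + e_a2b2


def local_hidden_variable_bound():
    """Largest |S| when every outcome (+1 or -1) is fixed in advance."""
    best = 0
    for a1, a2, b1, b2 in itertools.product((-1, 1), repeat=4):
        s = chsh(a1 * b1, a1 * b2, a2 * b1, a2 * b2)
        best = max(best, abs(s))
    return best


def quantum_correlation(angle_a, angle_b):
    """Assumed textbook prediction for an anticorrelated (singlet) pair."""
    return -math.cos(angle_a - angle_b)


def quantum_chsh():
    """S for one commonly used set of detector angles (an assumption)."""
    a1, a2 = 0.0, math.pi / 2
    b1, b2 = math.pi / 4, 3 * math.pi / 4
    return chsh(
        quantum_correlation(a1, b1),
        quantum_correlation(a1, b2),
        quantum_correlation(a2, b1),
        quantum_correlation(a2, b2),
    )


if __name__ == "__main__":
    print("hidden-variable bound:", local_hidden_variable_bound())
    print("quantum prediction:   ", abs(quantum_chsh()))
```

In this sketch, pre-assigned outcomes can never push |S| above 2, and random mixtures of such assignments are averages of them, so they cannot either. The assumed quantum correlation reaches 2√2, roughly 2.83. That gap is what repeated measurements of the correlations can detect.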

The first to attempt Bell’s experiment were John Clauser and Stuart Freedman at the University of California at Berkeley, whose 1972 study of entangled photon pairs emitted simultaneously from calcium atoms favoured the quantum mechanical view over Einstein’s hidden variables. But those results weren’t entirely clear-cut. The first definitive demonstration that entangled particles behave just as quantum mechanics says they should was conducted in 1982 by Alain Aspect and co-workers at the University of Paris in Orsay, who used laser and fibre-optic technologies to generate and manipulate entangled photons.

If the quantum-mechanical picture is correct, and the properties of two entangled particles are indeterminate until one is measured, it looks indeed as if there is instantaneous communication between them: the unobserved particle seems to “know” at once which spin the measurement on the other has produced. Contrary to what Einstein thought, however, that doesn’t violate relativity. Even though the correlation is manifested instantly, we can never establish that this is so without exchanging some conventional signal between the locations of the two particles. So we can’t use entanglement to communicate faster than light—and that is all that special relativity prohibits.
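The no-signalling point can be checked with a few lines of arithmetic. This sketch assumes the standard quantum joint probabilities for a spin-anticorrelated pair, P(x, y) = (1 - x·y·cos(θA - θB))/4, with outcomes x, y = ±1 and detector angles θA and θB. That formula is not given in this article, and the function names are illustrative only.

```python
import math


def joint_probability(x, y, angle_a, angle_b):
    """Assumed singlet-state probability of outcomes x, y (each +1 or -1)."""
    return (1 - x * y * math.cos(angle_a - angle_b)) / 4


def marginal_for_b(y, angle_a, angle_b):
    """Probability that B records y, whatever A happens to get."""
    return sum(joint_probability(x, y, angle_a, angle_b) for x in (-1, 1))


if __name__ == "__main__":
    # Vary A's detector angle while B's stays fixed.
    for angle_a in (0.0, math.pi / 3, math.pi / 2):
        print(angle_a, marginal_for_b(+1, angle_a, angle_b=0.0))
```

Under these assumptions the person at B sees a 50:50 split whatever angle is chosen at A, so nothing done at A can be read off from B's results alone. The correlation shows up only when the two records are compared, and that comparison needs an ordinary signal.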

All the same, how can one particle affect the other without any interaction between them? For years, no one could find fault with Einstein, Podolsky and Rosen’s reasoning: it seemed to rest on unassailable assumptions. But in quantum theory, what looks like common sense might turn out to be wrong. Einstein and his colleagues made the perfectly reasonable assumption of locality: that the properties of a particle are localised on that particle, and what happens here can’t affect what happens there without some way of transmitting the effects across the intervening space.

But nature simply doesn’t work this way at the quantum level. We can’t regard the two particles in Einstein’s thought experiment as separate entities; even though they are separated by space, they are both parts of a single object. Or to put it another way, the spin of particle A is not located solely on A in the way that the redness of a cricket ball is located on the cricket ball. In quantum mechanics, properties can be “non-local.” Only if we accept Einstein’s assumption of locality do we need to tell the story in terms of a measurement on particle A “influencing” the spin of particle B. Quantum non-locality, which is just what the entanglement experiments have verified, is the alternative to that view.

That’s why “spooky action at a distance” is precisely what entanglement is not—nothing is “acting” across the intervening space. Some quantum physicists tear their hair out at the way Einstein’s catchy phrase is nonetheless recycled by the media. Others accept it wearily as a price that must be paid for communicating what entanglement is about. Talk of “quantum non-locality” offers scant intuitive purchase; we lack ordinary words or metaphors to say what it means.

That’s frustrating, because the non-locality of entanglement does seem to be the defining aspect of quantum mechanics. You can regard it as an extension of the principle of quantum superposition, which says that—to put it crudely—a particle may seem to be in two (or more) states at once until measurement forces a “choice” between them. Entanglement is that same idea applied to two or more particles. Although the particles are separated, they must be considered a single state. Entanglement shows us that in the quantum world separation in space does not guarantee independence, even though no measurable interaction exists between the two places. It makes a mockery of space. And as if that’s not enough, other experiments on entanglement and superposition seem to traduce time too: it looks as if a measurement at one moment affects the state of the quantum system at an earlier time.

The experiment conducted last year in the Netherlands is yet another verification of quantum non-locality. Why do we need that, more than three decades after Aspect’s result? The reason is that Aspect didn’t completely settle the issue: there were several loopholes in the argument that could preserve a local hidden-variables theory. For example, what if there really was some influence that transmitted the effect of measuring one particle very quickly to the other? This “communication loophole” was ruled out in 1998. Or what if some process happening at the source of the entangled particles gave them definite spins from the outset, but somehow influenced the measurement process to mask it? That loophole was closed in 2010 in a remarkable experiment that placed the particle sources and one of the detectors on separate islands in the Canaries.

These loopholes might sound like grasping at straws, but in quantum mechanics such things require painstaking testing. The big excitement about the Dutch experiment stemmed from the fact that it shuts the door even more firmly on Einstein’s idea of hidden variables. In an ingenious tour-de-force, the Delft team, led by the physicist Ronald Hanson, made measurements on entangled electrons, which can be more reliably detected than photons of light (so ruling out the loophole that one is just seeing an unrepresentative subset of entangled particles). And to exclude the communication loophole it linked the electrons’ entanglement to that between photons, which can be transmitted over long distances, in this case 1.3km, along optical fibres.

The reality of entanglement and quantum non-locality has potentially dramatic ramifications. One speculative idea is that entanglement might be the key to the longstanding mystery of how to reconcile quantum mechanics with Einstein’s theory of general relativity, which describes gravity. In this view, perhaps entanglement is what stitches together the very fabric of spacetime.

Entanglement is useful too. In an increasingly networked world, with confidential information racing back and forth along optical fibres, it can be used to make telecommunications secure and keep data safe from prying eyes. By sending data encoded in entangled photons, it becomes impossible even in principle for anyone to intercept and read it without the eavesdropping being detected. The mere act of looking alters the data. This quantum cryptography was used—more as a proof of principle than as a necessary precaution—to send results of the Swiss federal election in 2007 from a data entry centre to the government repository in Geneva. Commercial quantum-cryptography systems are now marketed by several companies, and China has just begun installing a fibre-optic quantum communications network for secure transmission of government and financial data.

Ironically, one of the main reasons why this new security method is needed is that the other star turn of quantum IT—quantum computers—could make old techniques obsolete. Encryption of banking and personal data for online transactions is currently achieved by encoding the data as factors of numbers too big to factorise with any of today’s computers, unless you know the encryption key. But rapid factorisation is one of the things a quantum computer does spectacularly well.
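A toy illustration of why factorising matters, modelled on RSA-style public-key encryption. The article does not name a scheme, so RSA is an assumption here, and the primes are absurdly small and chosen only for illustration. Real keys use numbers hundreds of digits long, for which the brute-force search below is hopeless on classical hardware.

```python
def make_keys(p, q, e=17):
    """Build a toy RSA key pair from two small primes (illustrative only)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)  # modular inverse; needs Python 3.8 or later
    return (n, e), (n, d)


def encrypt(message, public_key):
    """Encrypt a number smaller than n with the public key."""
    n, e = public_key
    return pow(message, e, n)


def decrypt(cipher, private_key):
    """Decrypt with the private key."""
    n, d = private_key
    return pow(cipher, d, n)


def factorise(n):
    """Trial division: fine for toy numbers, infeasible for real key sizes."""
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 1
    raise ValueError("n is prime")


if __name__ == "__main__":
    public, private = make_keys(61, 53)
    cipher = encrypt(65, public)
    print(decrypt(cipher, private))  # the legitimate holder recovers 65

    # Anyone who can factorise n can rebuild the private key.
    p, q = factorise(public[0])
    _, cracked = make_keys(p, q)
    print(decrypt(cipher, cracked))
```

The security of this kind of scheme rests entirely on `factorise` being too slow for large n. The best-known quantum method for factorising, Shor's algorithm, is not shown here.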

Quantum computers are already starting to leave the benchtop and enter the IT industry. Google has a team on the job at its Californian campus at Mountain View, and Ronald Hanson at Delft is part of a government-backed effort called the QuTech Centre. In May, IBM showcased its chip-based quantum processor containing five entangled bits (qubits; a bit is a unit of information). It is now available to the public via a cloud-based platform called IBM Quantum Experience. It can’t really do anything that your laptop can’t already, but IBM expects medium-sized quantum processors of 50-100 qubits to be possible in the next decade. “With a quantum computer built of just 50 qubits,” the company says, “none of today’s top 500 supercomputers could successfully emulate it.” Some researchers say that it is already time to start thinking about building a quantum internet, which will harness entanglement to make information transfer faster, more efficient and more secure.
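A rough back-of-envelope for IBM's 50-qubit claim. It assumes the emulation stores the full quantum state as one complex amplitude per basis state, at 16 bytes each (double-precision real and imaginary parts). That storage scheme is an assumption, not something IBM's statement specifies, and cleverer simulation methods change the numbers.

```python
BYTES_PER_AMPLITUDE = 16  # assumed: two 64-bit floats per complex amplitude


def state_vector_bytes(num_qubits):
    """Memory needed to hold all 2**n amplitudes of an n-qubit state."""
    return (2 ** num_qubits) * BYTES_PER_AMPLITUDE


if __name__ == "__main__":
    for n in (5, 30, 50):
        print(n, "qubits:", state_vector_bytes(n), "bytes")
```

On these assumptions five qubits need 512 bytes, 30 qubits need 16 GiB and 50 qubits need 2^54 bytes, or 16 PiB. Every extra qubit doubles the figure.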

The much-vaunted “spooky action at a distance” is, then, neither spooky nor action. Instead, it’s one of the strangest characteristics of the physical world—and may yet turn out to be one of the most revelatory.